Introduction

Welcome to the Azure AKS Kubernetes deployment security Workshop.

We won’t spend much time introducing AKS, a service that has become very popular in recent months.

In brief, AKS is Microsoft’s managed container orchestration service. It succeeds Azure Container Service (ACS) and focuses solely on the Cloud Native Computing Foundation (CNCF) Kubernetes orchestration engine.

In the previous workshop, “Create a Kubernetes cluster with Azure AKS using Terraform”, we discussed the Azure Kubernetes Service (AKS) basics, the Infrastructure as Code (IaC) mechanism with a focus on HashiCorp Terraform, and how to deploy a Kubernetes cluster with AKS using Terraform.

With this lab, you’ll go through tasks that will help you master the basic and more advanced topics required to secure an Azure AKS Kubernetes cluster at deployment time, based on the following mechanisms and technologies:

- ✅Azure AD (AAD)

- ✅AKS with Role-Based Access Control (RBAC)

- ✅Container Network Interface (CNI)

- ✅Azure Network policy

- ✅Azure Key Vault

This article is part of a series:

- Secure AKS at the deployment: part 1

- Secure AKS at the deployment: part 2

- Secure AKS at the deployment: part 3

Assumptions and Prerequisites

- You have basic knowledge of Azure

- You have basic knowledge of Kubernetes

- You have Terraform installed on your local machine

- You have basic experience with Terraform

- Azure subscription: sign up for an Azure account if you don’t already have one. You will receive USD 200 in free credits.

Implement Network Policies to secure AKS at the deployment

In the first part, we covered the basics and prerequisites for integrating Azure Active Directory (AAD) authentication and RBAC management into an AKS cluster. In the second part, we continued exploring how AAD can be used to secure AKS.

In this part, we finish our exploration of securing AKS by looking at Kubernetes Network Policies.

1- Network policy options in AKS

Azure provides two ways to implement network policy. You choose the option when you create an AKS cluster, and it can’t be changed after the cluster is created. The two options are:

- Azure’s own implementation, called Azure Network Policies.

- Calico Network Policies, an open-source network and network security solution founded by Tigera.

Both implementations use Linux iptables to enforce the specified policies. Policies are translated into sets of allowed and disallowed IP pairs, which are then programmed as iptables filter rules.

Differences between Azure and Calico policies and their capabilities

| Capability | Azure | Calico |
|---|---|---|
| Supported platforms | Linux | Linux |
| Supported networking options | Azure CNI | Azure CNI and kubenet |
| Compliance with Kubernetes specification | All policy types supported | All policy types supported |
| Additional features | None | Extended policy model consisting of Global Network Policy, Global Network Set, and Host Endpoint. For more information on using the calicoctl CLI to manage these extended features, see calicoctl user reference. |
| Support | Supported by Azure support and engineering team | Calico community support. For more information on additional paid support, see Project Calico support options. |
| Logging | Rules added/deleted in iptables are logged on every host under /var/log/azure-npm.log | For more information, see Calico component logs |

2- Using Azure Network Policies in AKS

By default, access to applications exposed outside an AKS cluster through a Kubernetes service is controlled by the Network Security Group (NSG) associated with the network interfaces (NICs) of the cluster nodes.

Whenever an application is exposed externally, a public IP address is provisioned along with the corresponding load-balancing rule.

This filtering happens purely at the Azure IaaS level, so inside the cluster all pods can still communicate with each other.

For added security, we use network policies, which provide iptables-based filtering at the pod level.

To see network policies in action, let’s create and then expand on a policy that defines traffic flow:

- Deny all inbound traffic to a pod

- Allow traffic based on namespace

First, you need to create an AKS cluster that supports network policy.

ℹ️ Important

The network policy feature can only be enabled when the cluster is created.
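The previous workshop deployed AKS with Terraform; as a quick alternative, here is a minimal Azure CLI sketch. The resource group, cluster name, and region are hypothetical placeholders. `--network-plugin azure` selects Azure CNI, which Azure Network Policies require (see the table above), and `--network-policy azure` picks Azure's implementation (`calico` would select Calico instead). In Terraform, the equivalent settings are `network_plugin` and `network_policy` inside the `network_profile` block of the `azurerm_kubernetes_cluster` resource.

```shell
# Hypothetical names: replace the resource group, cluster name and region with your own.
RESOURCE_GROUP=myResourceGroup
CLUSTER_NAME=myAKSCluster
LOCATION=westeurope

az group create --name "$RESOURCE_GROUP" --location "$LOCATION"

# Network policy must be chosen at creation time: Azure CNI plus Azure Network Policies.
az aks create \
  --resource-group "$RESOURCE_GROUP" \
  --name "$CLUSTER_NAME" \
  --node-count 1 \
  --network-plugin azure \
  --network-policy azure \
  --generate-ssh-keys

# Merge the cluster credentials into ~/.kube/config so kubectl targets this cluster.
az aks get-credentials --resource-group "$RESOURCE_GROUP" --name "$CLUSTER_NAME"
```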

3- Deny all inbound traffic to a pod

As a basic security measure, we can set up a network policy, applied at deployment time, that blocks all incoming (ingress) traffic by default in a given namespace.

You can then clearly see that traffic is dropped once the network policy is applied.

For the sample application environment and traffic rules, let’s first create a namespace called development to run the example pods:
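A minimal sketch of this step with kubectl. The `purpose=development` label is an illustrative choice, not taken from the original manifests; it only matters if a later policy selects the namespace by label.

```shell
# Namespace that will hold the sample back-end and the test pods.
kubectl create namespace development

# Illustrative label so a namespaceSelector can match this namespace later.
kubectl label namespace/development purpose=development
```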

Next, create an example back-end pod that runs NGINX. It simulates a sample web-based back-end application and is exposed on port 80 through a service named backend, which the test pods call later.
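One way to run the back-end, sketched with `kubectl run`. The pod name and labels follow the text; the `--expose` and `--port 80` flags additionally create a ClusterIP service called `backend`, which is the name the test pods request.

```shell
# NGINX pod labeled app=webapp,role=backend in the development namespace.
# --expose with --port 80 also creates a ClusterIP service named "backend".
kubectl run backend --image=nginx --labels app=webapp,role=backend \
  --namespace development --expose --port 80
```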

Then, create another pod and attach a terminal session to test that you can successfully reach the default NGINX webpage:
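A sketch of the test pod, assuming the public `alpine` image (its BusyBox shell includes `wget`). The pod name `network-policy` is arbitrary.

```shell
# Throwaway Alpine pod with an interactive shell; --rm deletes the pod when you exit.
kubectl run --rm -it --image=alpine network-policy --namespace development
```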

At the shell prompt, use wget to fetch the default NGINX webpage:
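Inside the test pod, a request along these lines fetches the page through the `backend` service; the short name resolves because the test pod runs in the same namespace as the service.

```shell
# Run inside the test pod: print the response body to stdout, without progress output.
wget -qO- http://backend
```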

The request returns the default NGINX webpage, whose HTML contains a title such as:

<title>Welcome to nginx!</title> […]

Exit out of the attached terminal session. The test pod is automatically deleted.

Create and apply a network policy

Now that you’ve confirmed you can reach the basic NGINX webpage on the sample back-end pod, create a network policy to deny all traffic. Create a file named backend-policy.yaml and paste the following YAML manifest. This manifest uses a podSelector to attach the policy to pods that have the app=webapp,role=backend labels, like your sample NGINX pod. Then apply it with kubectl apply -f backend-policy.yaml.
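A minimal sketch of backend-policy.yaml, written with a shell heredoc so it can be pasted as is. It follows the description above: the podSelector matches `app=webapp,role=backend`, and the empty `ingress` list means no inbound traffic is allowed to the selected pods. The `namespace: development` field is an assumption matching the namespace used in this walkthrough.

```shell
# Write the deny-all ingress policy; the quoted 'EOF' prevents shell expansion inside the manifest.
cat > backend-policy.yaml <<'EOF'
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: backend-policy
  namespace: development
spec:
  # Attach the policy to the NGINX back-end pod.
  podSelector:
    matchLabels:
      app: webapp
      role: backend
  # An empty ingress list admits no inbound traffic to the selected pods.
  ingress: []
EOF
# Apply with: kubectl apply -f backend-policy.yaml
```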

Test the network policy

Let’s see if you can use the NGINX webpage on the back-end pod again. Create another test pod and attach a terminal session:
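As before, a throwaway Alpine pod in the development namespace is enough for this test; the name `network-policy` is arbitrary.

```shell
# New test pod in the same namespace as the back-end; deleted automatically on exit.
kubectl run --rm -it --image=alpine network-policy --namespace development
```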

Use wget to check whether you can access the default NGINX webpage, this time with a timeout of 2 seconds. Because the network policy now blocks all inbound traffic, the page can’t be loaded and the request times out.
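From the test pod's shell, a BusyBox `wget` call with a 2-second timeout and a single attempt could look like this. The exact error text may differ between `wget` implementations.

```shell
# Run inside the test pod: give up after 2 seconds and one attempt.
# With the deny-all policy applied, expect a failure such as "wget: download timed out".
wget -O- --timeout=2 --tries=1 http://backend
```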

4- Allow traffic only from within a defined namespace

In the previous examples, you created a network policy that denied all traffic. Another common need is to limit traffic to within a given namespace. Since the previous examples ran in a development namespace, create a network policy that prevents traffic from another namespace, such as production, from reaching the pods.

First, create a new namespace to simulate a production namespace:
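A sketch of the production namespace setup. As with development, the `purpose=production` label is illustrative.

```shell
# Namespace that simulates production.
kubectl create namespace production

# Illustrative label; it does not match purpose=development.
kubectl label namespace/production purpose=production
```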

Schedule a test pod labeled app=webapp,role=frontend in the production namespace and attach a terminal session:

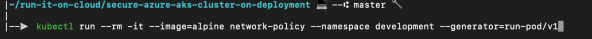

kubectl run --rm -it frontend --image=alpine --labels app=webapp,role=frontend --namespace production

Then, use wget to confirm that you can access the default NGINX webpage:
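Because the back-end service lives in the development namespace, a pod in production has to qualify the service name with that namespace (`backend.development`), which cluster DNS resolves. A sketch, run inside the front-end pod:

```shell
# Run inside the front-end pod: <service>.<namespace> reaches a service in another namespace.
wget -qO- http://backend.development
```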

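The network policy doesn’t look at namespaces, only at pod labels, so a pod labeled app=webapp,role=frontend is treated the same way in any namespace. (This assumes the back-end policy currently admits pods with those labels from any namespace, for example through an ingress rule that pairs an empty namespaceSelector with that podSelector; with only the deny-all policy from the previous section in place, the request would time out instead.) The following example output shows the default NGINX webpage returned: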

<title>Welcome to nginx!</title> […]

Exit out of the attached terminal session. The test pod is automatically deleted.

Update the network policy

Let’s update the namespaceSelector section of the ingress rule so that only traffic from within the development namespace is allowed. Edit the backend-policy.yaml manifest file as shown in the following example:
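A sketch of the edited manifest, written with a shell heredoc. It assumes the development namespace is labeled `purpose=development` (for example with `kubectl label namespace/development purpose=development`) and that the front-end test pods carry `app=webapp,role=frontend`, as in the commands in this section. Because `namespaceSelector` and `podSelector` sit in the same `from` entry, Kubernetes combines them with a logical AND; listing them as two separate entries would instead allow traffic matching either one.

```shell
# Overwrite backend-policy.yaml so that ingress only admits front-end pods
# from namespaces labeled purpose=development (assumed namespace label).
cat > backend-policy.yaml <<'EOF'
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: backend-policy
  namespace: development
spec:
  # Still attached to the NGINX back-end pod.
  podSelector:
    matchLabels:
      app: webapp
      role: backend
  ingress:
  - from:
    # Same list item: the source must be a front-end pod AND live in a
    # namespace labeled purpose=development.
    - namespaceSelector:
        matchLabels:
          purpose: development
      podSelector:
        matchLabels:
          app: webapp
          role: frontend
EOF
```

On Kubernetes 1.21 and later, every namespace also gets an automatic `kubernetes.io/metadata.name` label, which could be matched instead of a hand-applied label.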

Apply the updated network policy:
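Applying and then inspecting the policy could look like this; `kubectl describe` simply prints the selectors the API server stored, which is a quick way to confirm the AND combination was parsed as intended.

```shell
# Replace the policy stored in the cluster with the edited manifest.
kubectl apply -f backend-policy.yaml

# Optional: show the pod and namespace selectors now attached to the policy.
kubectl describe networkpolicy backend-policy --namespace development
```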

Test the updated network policy

Schedule a pod labeled app=webapp,role=frontend in the development namespace and attach a terminal session:

kubectl run --rm -it frontend --image=alpine --labels app=webapp,role=frontend --namespace development

Use wget to confirm that the network policy allows the traffic:

wget -qO- http://backend

Traffic is allowed because the pod is scheduled in the namespace that matches what’s permitted in the network policy.
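The complementary check is that a front-end pod running in production is now refused. A sketch, assuming the updated policy restricts ingress by the development namespace label as described above; the first command runs on your workstation and the `wget` line is typed inside the pod's shell.

```shell
# On your workstation: start a front-end pod in the production namespace.
kubectl run --rm -it frontend --image=alpine --labels app=webapp,role=frontend --namespace production

# Inside that pod's shell: production does not match the policy's namespaceSelector,
# so this request is expected to time out after 2 seconds.
wget -O- --timeout=2 --tries=1 http://backend.development
```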

Conclusion

In this article, we took a quick look at the capabilities of Kubernetes Network Policies, which complement the security of an AKS cluster. Other features are coming to AKS, such as AAD Pod Identity, which lets pods authenticate against Azure, and private clusters, which avoid exposing the Kubernetes API server on a public URL.

That’s all folks!

That’s all for this workshop, thanks for reading! Later posts may cover best practices for running a fully configured cluster with hundreds of microservices deployed in one click!

